Authors: Constantin Dalyac, Loïc Henriet and Christophe Jurczak

The question of when quantum computers will become powerful enough for companies to start exploring their use in production is one of the most hotly debated topics in the field. The QC community has come up with concepts such as quantum supremacy, quantum advantage and quantum utility, along with metrics such as quantum volume, Q-score and many others, to measure progress. Additionally, complexity theory has been called upon to provide performance guarantees. However, what ultimately matters is systematic benchmarking against the best solutions used in production for real-world problems of interest, whether scientific, industrial, or commercial.

At Pasqal we believe this is crucial to guide research, track progress, and advance our understanding of quantum algorithms. We have put together multidisciplinary teams (quantum sciences, computer sciences, condensed matter physics, operations research, AI …) to address three main classes of applications: optimization, simulation and machine learning. For each class we develop original theoretical research with our academic and industrial partners, coupled with emulation and empirical research using our own Quantum Processing Units (QPUs). This approach lets us bridge the gap between quantum computing and domain problems in a comprehensive way, allowing for optimal use of quantum resources on one side and deep understanding of algorithm performance and domain relevance on the other side. In doing so, we ensure that our research not only pushes the boundaries of quantum computing but also translates into meaningful advancements in real-world applications.

Creating Meaningful Benchmarks

A key aspect is to build classically hard benchmark problems before benchmarking them on QPUs. Indeed, a critical challenge has been that past comparisons (especially in optimization) are often based on overly simple problem instances, leading to misleading speed-up claims. Just as judging a chef’s skill is more meaningful when preparing a complex dish like “boeuf bourguignon” rather than simply boiling an egg, we need harder problem instances to truly assess quantum computing’s capabilities. This way we make sure that we are not restricted to low-hanging fruit and proofs of concept that can’t be generalized.

This paper is the first in a series of three, focusing on combinatorial optimization running on our neutral atom platform in analog mode. It aims to address three legitimate questions:

- Are today’s quantum computers faster or slower than classical solvers across all tested instances for an equivalent level of accuracy?

- Based on these results, can we extrapolate the problem size at which quantum computers might outperform classical solvers in terms of wall-clock time for achieving an equivalent accuracy?

- Besides atom number, what are the key hardware performance metrics that need to improve to reach this level of performance?

Our Methodology and Findings

To answer these questions, we leverage insights from complexity theory to design harder instances of a well-known problem in graph theory and combinatorial optimization, the maximum independent set (MIS) problem on unit-disk graphs. This problem involves finding the largest set of points that are all separated by at least a minimum distance – a challenge that appears in many real-world applications like wireless network design and molecular structure analysis. We benchmarked these problems on our Orion Alpha quantum processor using 100 qubits and gathering 100,000 experimental data points. This ensures a meaningful evaluation of our quantum hardware capabilities.
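
To make the problem statement concrete, here is a minimal Python sketch of MIS on a unit-disk graph. It is an illustration only, not the method used in the benchmark: the function names, the sample coordinates, and the `radius` value are all assumptions introduced here, and the exhaustive search is only practical for a handful of points.

```python
from itertools import combinations
from math import dist


def unit_disk_edges(points, radius):
    """Return the pairs (i, j), i < j, of points closer than `radius`."""
    return {
        (i, j)
        for i, j in combinations(range(len(points)), 2)
        if dist(points[i], points[j]) < radius
    }


def is_independent(subset, edges):
    """True if no two vertices of `subset` (sorted indices) share an edge."""
    return all(pair not in edges for pair in combinations(subset, 2))


def maximum_independent_set(points, radius):
    """Exhaustive search, largest subsets first. Exponential time: toy sizes only."""
    edges = unit_disk_edges(points, radius)
    n = len(points)
    for size in range(n, 0, -1):
        for subset in combinations(range(n), size):
            if is_independent(subset, edges):
                return list(subset)
    return []


# Hypothetical layout: four points on a line plus one point above the middle.
points = [(0, 0), (1, 0), (2, 0), (3, 0), (1.5, 0.8)]
solution = maximum_independent_set(points, radius=1.2)
print(solution)
```

In this toy layout the search returns three mutually distant points. The cost of checking every subset grows exponentially with the number of points, which is why larger and denser instances call for specialized classical solvers or, potentially, quantum hardware.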

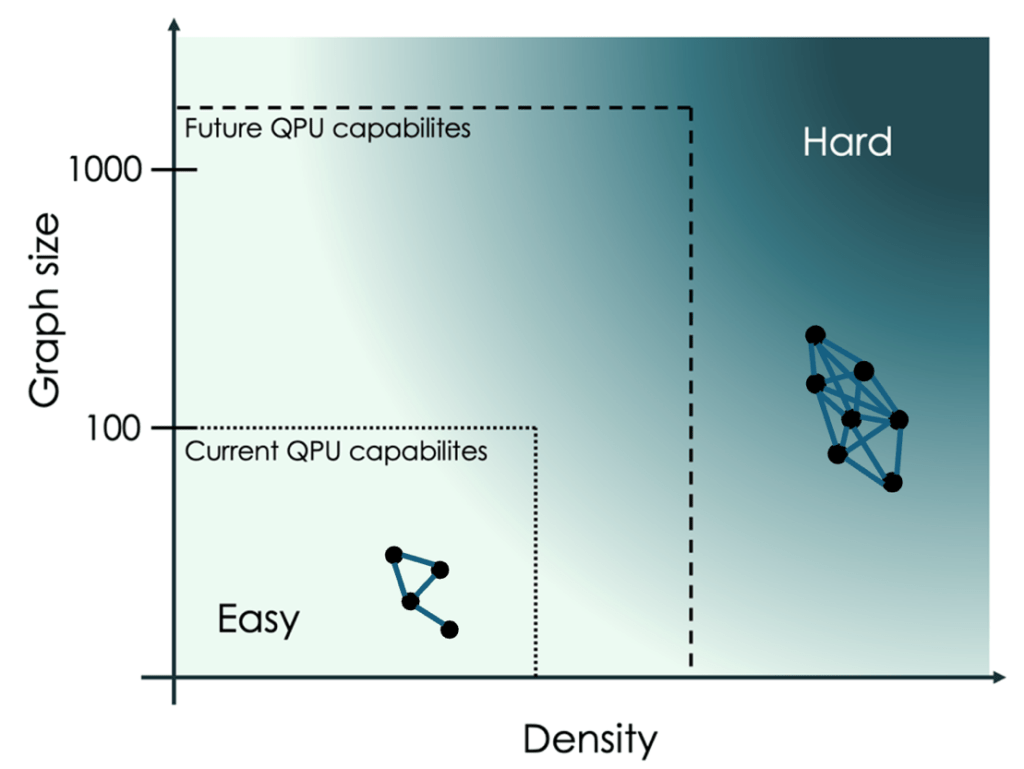

Graphs with higher density and bigger size are harder to solve classically. Current QPU capabilities (dotted line) are limited to relatively small, low-density graphs. Future QPU advancements (dashed line) are expected to push the boundaries, enabling the resolution of larger and denser problems that are much harder.

Comparing these results with classical state-of-the-art solvers used routinely by our industrial customers (EDF, Thales, CMA CGM, RTE, BMW) provides valuable insights into the key factors limiting current quantum performance and the engineering challenges that need to be addressed.

- Repetition rate is key: while quantum state preparation occurs at the MHz scale, bottlenecks in loading time, spatial arrangement, and imaging currently reduce the effective clock rate to just a few Hz. At Pasqal, we have designs to improve atom reservoir loading, assembly algorithms, and fast imaging techniques to push this towards the kHz range.

- Scaling to thousands of atoms is essential: larger problem instances will expose prohibitive classical runtimes. We have experimentally trapped more than 1,100 atoms, and arrays of up to 6,100 atoms have been demonstrated in academic labs, with a clear path for scalability.
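
To see why the repetition rate weighs so heavily on wall-clock time, consider a back-of-the-envelope sketch. The specific rates below (3 Hz for "a few Hz", 1 kHz for "the kHz range") and the assumption that each of the 100,000 data points corresponds to one shot are illustrative choices, not figures from the benchmark.

```python
def wall_clock_seconds(shots, repetition_rate_hz):
    """Time needed to collect `shots` runs at a fixed repetition rate."""
    return shots / repetition_rate_hz


SHOTS = 100_000  # assumption: one shot per experimental data point

# Assumed, illustrative rates for the two regimes mentioned in the text.
scenarios = [
    ("a few Hz (assumed 3 Hz)", 3.0),
    ("kHz range (assumed 1 kHz)", 1_000.0),
]

for label, rate_hz in scenarios:
    seconds = wall_clock_seconds(SHOTS, rate_hz)
    print(f"{label}: {seconds:,.0f} s (about {seconds / 3600:.2f} h)")
```

Under these assumptions, the same number of shots takes roughly nine hours at 3 Hz and under two minutes at 1 kHz.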

These challenges are engineering problems with clear solutions and Pasqal’s teams are already addressing them to improve the next generation of products.

The Path Forward

Although quantum advantage for MIS on neutral atom systems has yet to be achieved for sufficiently large and dense graphs of industrial interest, the roadmap is increasingly clear. With continued improvements in hardware scalability, interaction programmability, and experimental efficiency, neutral atom quantum processors will soon be able to compete more effectively with classical approaches for a well-defined class of complex combinatorial problems. We believe this research will focus the quantum computing community on benchmarking more challenging problem instances, driving further progress toward achieving practical quantum advantage. In upcoming work, we will present additional benchmarks in different applications, where quantum advantage appears even more promising.

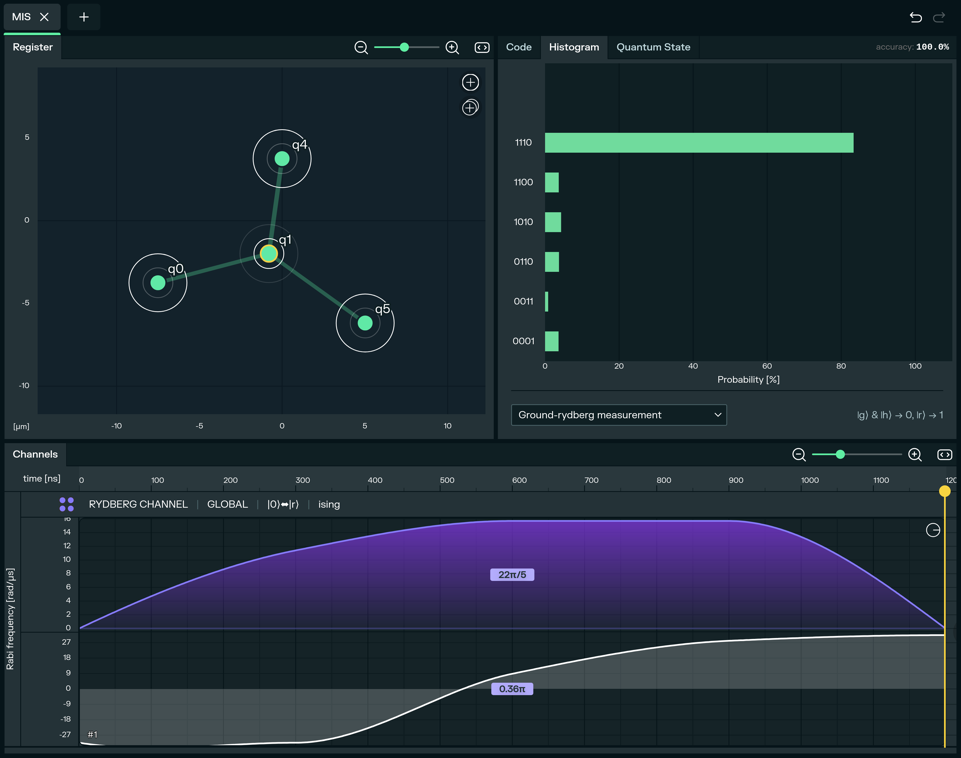

Seamlessly programming the MIS problem on Pasqal’s QPU using Pulser Studio, an intuitive no-code platform.

This research is the result of a combined effort from our hardware, software, and benchmarking teams.

We thank our partners and collaborators who share our vision of making quantum computing a reality.